Last year the vendor announced its predecessor, the Drive PX. “Several thousand man-years have gone into advances in self-driving cars at Nvidia over the last year,” said CEO Jen-Hsun Huang (pictured).

However, he acknowledged that developing a car that can perceive and negotiate obstacles by itself on busy streets is not straightforward.

“Self-driving cars is hard,” he said, referring to changeable factors such as roadworks and pedestrians. “It can be chaotic, complex and sometimes hazardous”.

The solution, he argued, is to use Artificial Intelligence (AI) to enable major advances in areas such as image recognition, which are central to autonomous driving. Drive PX2 is based on AI.

Nvidia’s strategy centres on a so-called deep learning platform for self-driving cars: a cloud-connected world in which an individual vehicle reports back to the wider network about changing conditions. The network then updates other cars on the road.
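The report-and-update loop described above can be pictured with a minimal sketch. Everything in it is hypothetical and for illustration only: the `Car` and `FleetNetwork` names, their methods and the example condition are assumptions, not part of any Nvidia product or API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Car:
    """Hypothetical vehicle that remembers conditions it has been told about."""
    name: str
    known_conditions: List[str] = field(default_factory=list)

    def receive_update(self, condition: str) -> None:
        self.known_conditions.append(condition)


class FleetNetwork:
    """Hypothetical cloud service that relays one car's report to all the others."""

    def __init__(self) -> None:
        self.cars: List[Car] = []

    def register(self, car: Car) -> None:
        self.cars.append(car)

    def report(self, sender: Car, condition: str) -> None:
        # Forward the report to every registered car except the one that sent it.
        for car in self.cars:
            if car is not sender:
                car.receive_update(condition)


network = FleetNetwork()
car_a, car_b, car_c = Car("A"), Car("B"), Car("C")
for car in (car_a, car_b, car_c):
    network.register(car)

# Car A spots roadworks; the network passes that on to B and C.
network.report(car_a, "roadworks on Main Street")
print(car_b.known_conditions, car_c.known_conditions, car_a.known_conditions)
```

A real system would also have to validate, aggregate and expire such reports; the sketch only shows the direction in which information flows.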

Nvidia’s deep learning technology consists of Drive PX2 as well as its Digits tool. A number of carmakers including BMW, Daimler and Ford are using Nvidia’s deep learning technology to develop their own self-driving technology, Nvidia’s chief explained. However, Reuters reports that Volvo Car Group will be the new device’s first customer.

Partnerships between automakers and Silicon Valley companies on self-driving technologies are taking centre stage at this year’s show.

Also on Monday, General Motors announced a $500 million investment in ride-sharing service Lyft.

The almost forgotten industry term "artificial intelligence", or AI, is back on the scene. As car makers work hard on self-driving technologies, machine-learning AI software is emerging as one of the most crucial enablers of autonomous cars.

A case in point is Nvidia's Drive PX 2 platform, which the chip maker says is the world's most powerful engine for in-vehicle artificial intelligence.

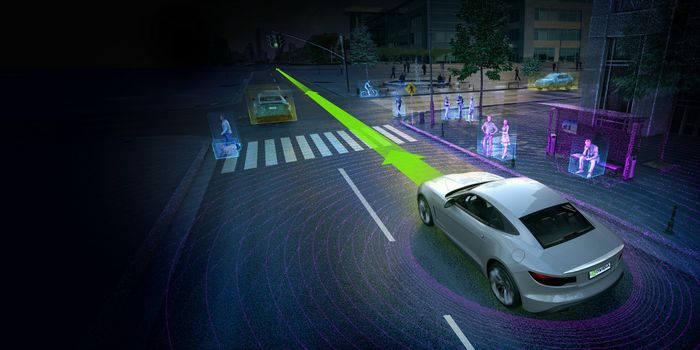

Built on Nvidia's next-generation Pascal architecture GPUs and two next-generation Tegra processors, the Drive PX 2 platform runs Nvidia's own deep learning algorithms to provide 360-degree situational awareness around the car, determine precisely where the car is, and compute a safe, comfortable trajectory.

"Drivers deal with an infinitely complex world," said Jen-Hsun Huang, co-founder and CEO of Nvidia. "Modern artificial intelligence and GPU breakthroughs enable us to finally tackle the daunting challenges of self-driving cars. Our GPU is central to advances in deep learning and supercomputing. We are leveraging these to create the brain of the future autonomous vehicles."

True enough, self-driving cars will require supercomputer-class computing power, as they will carry a dozen vision sensors, plus radars and ultrasonic sensors, to monitor and understand what is going on around the car. Processing these tons of data-heavy video and sensor signals is computationally demanding. The Drive PX 2 platform is powerful enough to process the inputs of 12 video cameras, plus lidar, radar and ultrasonic sensors, fusing them to accurately detect objects, identify them, determine where the car is relative to the world around it, and then calculate its optimal path for safe travel.

According to Nvidia, the combination of Tegra CPUs and Pascal GPUs can deliver up to 24 trillion deep learning operations per second, which are specialized instructions that accelerate the math used in deep learning network inference. That computing power is equivalent to 150 MacBook Pros, or over 100 times more computational horsepower than the previous-generation device. The DRIVE PX 2's deep learning capabilities enable it to quickly learn how to address the challenges of everyday driving such as unexpected road debris, erratic drivers and construction zones.
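To put those comparisons in perspective, the short calculation below divides out the quoted figures. It is a back-of-the-envelope sketch that takes the numbers exactly as reported here (vendor claims, not independent measurements); the function and variable names are illustrative.

```python
# Figures as quoted in the article; these are Nvidia's claims, not measurements.
PX2_DEEP_LEARNING_OPS = 24e12    # "up to 24 trillion deep learning operations per second"
MACBOOK_PRO_EQUIVALENTS = 150    # "equivalent to 150 MacBook Pros"
SPEEDUP_OVER_PREVIOUS = 100      # "over 100 times" the previous-generation device


def implied_share(total_ops: float, divisor: float) -> float:
    """Return the throughput implied for one unit when total_ops is split by divisor."""
    return total_ops / divisor


# Throughput the comparison implies for a single laptop.
per_laptop = implied_share(PX2_DEEP_LEARNING_OPS, MACBOOK_PRO_EQUIVALENTS)

# Upper bound implied for the previous generation ("over 100 times" means at most this).
previous_gen_ceiling = implied_share(PX2_DEEP_LEARNING_OPS, SPEEDUP_OVER_PREVIOUS)

print(f"Implied per laptop: {per_laptop / 1e12:.2f} trillion ops/s")
print(f"Implied previous-generation ceiling: {previous_gen_ceiling / 1e12:.2f} trillion ops/s")
```

Both results are only as reliable as the quoted claims, and "deep learning operations" are not directly comparable with general-purpose floating-point figures.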

Deep learning can also cope with conditions where traditional computer vision techniques struggle, such as poor weather (rain, snow and fog) and difficult lighting (sunrise, sunset and extreme darkness).

Drive PX 2's multi-precision GPU architecture is capable of up to 8 trillion operations per second, allowing car makers to address the full breadth of autonomous driving algorithms, including sensor fusion, localization and path planning.

At the heart of the platform is DriveWorks, a suite of software tools, libraries and modules that accelerates the development and testing of autonomous vehicles. It enables sensor calibration, acquisition of surround data, synchronization, recording and then processing of streams of sensor data through a complex pipeline of algorithms running on all of the DRIVE PX 2's specialized and general-purpose processors.

The chip maker is also offering DIGITS, a tool for developing, training and visualizing deep neural networks that can run on any NVIDIA GPU-based system -- from PCs and supercomputers to Amazon Web Services and the recently announced Facebook Big Sur Open Rack-compatible hardware.

The trained neural net model runs on NVIDIA DRIVE PX 2 within the car.

Since NVIDIA delivered the first-generation DRIVE PX last summer, more than 50 automakers, tier 1 suppliers, developers and research institutions have adopted NVIDIA's AI platform for autonomous driving development.

They are praising its performance, capabilities and ease of development.

"Using NVIDIA's DIGITS deep learning platform, in less than four hours we achieved over 96% accuracy using Ruhr University Bochum's traffic sign database. While others invested years of development to achieve similar levels of perception with classical computer vision algorithms, we have been able to do it at the speed of light," said Matthias Rudolph, director of Architecture Driver Assistance Systems at Audi.

"BMW is exploring the use of deep learning for a wide range of automotive use cases, from autonomous driving to quality inspection in manufacturing. The ability to rapidly train deep neural networks on vast amounts of data is critical. Using an NVIDIA GPU cluster equipped with NVIDIA DIGITS, we are achieving excellent results," said Uwe Higgen, head of BMW Group Technology Office USA.

"Due to deep learning, we brought the vehicle's environment perception a significant step closer to human performance and exceed the performance of classic computer vision," said Ralf G. Herrtwich, director of Vehicle Automation at Daimler.

"Deep learning on NVIDIA DIGITS has allowed for a 30X enhancement in training pedestrian detection algorithms, which are being further tested and developed as we move them onto NVIDIA DRIVE PX," said Dragos Maciuca, technical director of Ford Research and Innovation Center.

The DRIVE PX 2 development engine will be generally available in the fourth quarter of 2016.