In his opening keynote at the 2016 Intel Developer Forum, held in San Francisco on August 16, Intel CEO Brian Krzanich took the wraps off a new headset platform called Project Alloy, a prototype with a full built-in computer and various sensors that creates what he dubbed “merged reality.”

In merged reality, bitmapped virtual graphics and images of real objects are superimposed on each other so that users can interact with digital graphics in a virtual world.

In his short on-stage demo, Brian Krzanich showed how images of his hands were superimposed on the virtual world: the headset's built-in, front-facing RealSense 3D depth camera captured them in three dimensions, and its built-in CPU processed them and merged them into the virtual scene.

"Merged reality is about more natural ways of interacting with and manipulating virtual environments," he said in his blog post.

Applications are plentiful. In his blog post, published on Medium, he illustrated how people might use the technology, citing examples such as virtual trips to museums and virtual tennis practice.

What sets it apart from the commercially available virtual-reality headsets on the market is that you don’t need a controller, a separate high-performance computer, or dozens of external sensors to capture your gestures and body movements in three dimensions.

Intel’s RealSense depth-sensing technology and a built-in CPU work in sync to do everything merged reality requires.

Yet the technology still has a long way to go before its commercial debut, because the built-in CPU is not powerful enough for parallel computational workloads such as image rendering and ray tracing of massive 3D image data streams. The prototype’s CPU was painfully slow at these tasks, indicating that commercialization is still far away. Reliable, relevant software is not yet available, either.

To refine human-machine interaction further, the California-based chip maker is working with Microsoft to build software that will support Project Alloy. The software is currently in development with Microsoft’s Windows Holographic system and Microsoft’s AR solution, HoloLens.

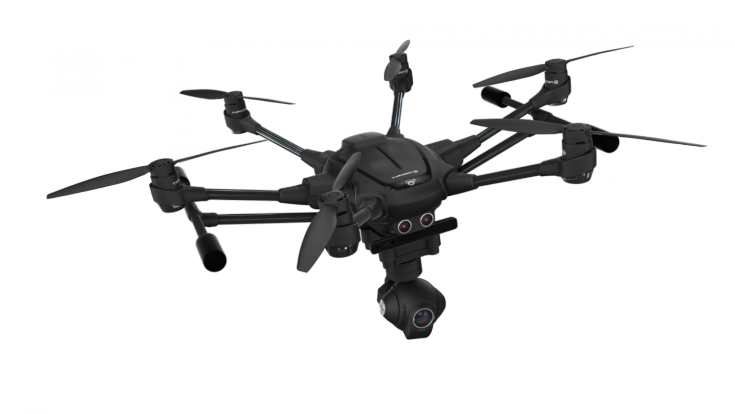

Intel also demonstrated how its RealSense technology will reshape the user interfaces of many devices and make them more intelligent. In drones, for example, it will enable intelligent obstacle avoidance, detecting objects and plotting an alternative course around them.

To help system vendors build applications around RealSense more easily, Intel introduced a candy-bar-sized development kit named Euclid that comes integrated with RealSense technology.

The kit is a sort of pre-assembled, Lego-block-like development board that carries a paired CPU and OS platform, RealSense sensor chips, and other components. System developers buy the board and plug in their own hardware and software features to differentiate their products from the competition.

The chip maker also unveiled Intel Joule, a high-performance system-on-module (SOM) built on a small, compact circuit board. The SOM serves as a development board that helps system designers build applications requiring high-end edge computing, such as drones, industrial IoT, VR, and AR.