(iTers News) - Self-driving cars are no longer a pipe dream on paper; they are rapidly becoming a commercial reality. Although car makers still have a long way to go before they roll out fully autonomous cars, chip makers are piecing together the key silicon building blocks one by one to deliver on the vision.

A case in point is Freescale Semiconductor, Inc. The world’s No. 1 automotive chip maker has taped out a vision microprocessor called the S32V, which the company says is the world’s first automotive vision SoC.

Complete with CPU and GPU cores, image signal and cognition processors, a sensor fusion MCU, and other I/O and memory peripherals, the S32V is designed to power current advanced driver assistance systems (ADAS) as well as a next generation of intelligent, self-aware ADAS.

Unlike today’s ADAS, which merely assists drivers in driving and parking safely, the next generation of self-aware ADAS must be intelligent enough to make decisions on its own, such as slamming on the brakes in an emergency, in what is called a self-aware co-pilot system.

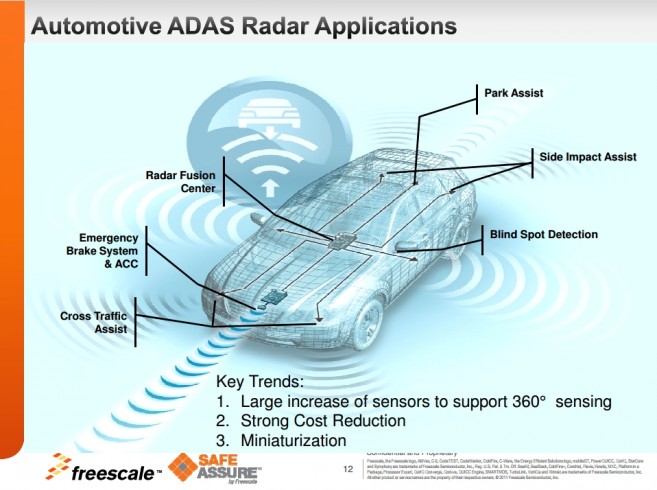

The emergency brake system (EBS) and automatic cruise control (ACC) are prime examples of the next generation of self-aware ADAS. When the self-aware ADAS detects an obstacle on the road ahead, the EBS automatically applies the brakes even if the driver has not noticed it.
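The braking decision described above can be illustrated with a time-to-collision check. This is only a minimal sketch, not Freescale's method: the function names and the 1.5-second threshold are hypothetical, and a production system would weigh many more inputs.

```python
def time_to_collision(distance_m, closing_speed_mps):
    """Return seconds until impact, or None if the gap is not closing."""
    if closing_speed_mps <= 0:
        return None  # obstacle is holding distance or pulling away
    return distance_m / closing_speed_mps


def should_brake(distance_m, closing_speed_mps, ttc_threshold_s=1.5):
    """Decide whether to brake; the threshold is an assumed value."""
    ttc = time_to_collision(distance_m, closing_speed_mps)
    return ttc is not None and ttc < ttc_threshold_s
```

For instance, an obstacle 10 m ahead closing at 10 m/s gives a time to collision of 1 second, which falls under the assumed threshold and triggers braking.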

The implementation of EBS in cars has already been legalized in EU countries, as the system can see and decide by itself to apply the brakes even when drivers fail to notice pedestrians walking across the road.

A highway platoon system is another example of self-aware ADAS, in which a driver-piloted lead truck wirelessly tows a fleet of unmanned trucks in line behind it, much as a locomotive pulls a line of railroad cars.

“The semi-autonomous co-pilot system is so complex that it is hard to implement. Yet car makers and silicon chip makers are working together to implement it,” said Jeremy Park.

As the term SoC, or system on chip, suggests, Freescale’s S32V vision SoC is a miniature representation of what an intelligent self-aware ADAS looks like.

Due to begin sample shipments in June, the S32V vision SoC comes with five main hardware blocks: an image signal processor platform, a CPU platform, a vision platform, a hardware security module, and a wireless connectivity platform.

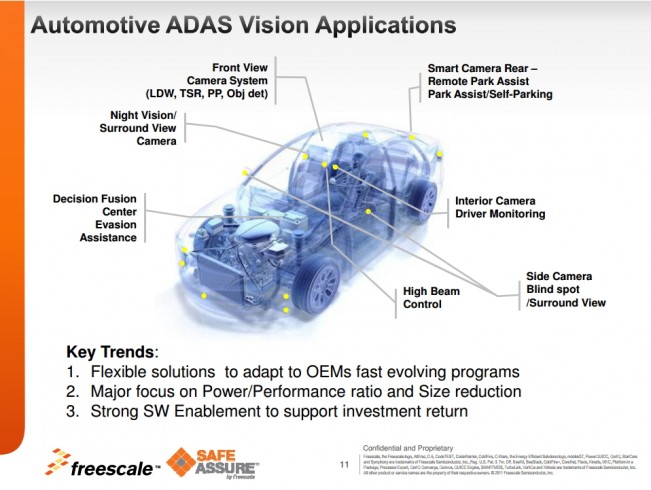

Indeed, ADAS relies on a concentration of vision sensors, such as front- and rear-view, night vision, and surround-view cameras, to warn drivers when their cars depart from their lanes and to help them detect blind spots and recognize traffic signals.

It is also packed with other sensors such as ultrasonic, radar, and LiDAR to ensure optimal resolution and image recognition accuracy. Connectivity and security systems are crucial parts of ADAS too, not only to link data streams from the various sensors but also to protect the CPU platform from hacking.

As a result, ADAS requires a great deal of computational power, along with motion vector calculation, error checking, hardware fault detection, secure key algorithm processing, a network-grade crypto engine, and MIPI interface processing.

For example, the S32V’s image signal platform comes with an image signal processor, dual MIPI camera interfaces, and two CogniVue APEX-642 image cognition processors to process image signals from a variety of MIPI-interfaced external cameras, such as front- and rear-view cameras.

In particular, the APEX-642 image cognition processors recognize and process features of image signals such as corners, edges, and shapes to reconstruct optimal images.
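To give a sense of what edge extraction involves, here is a minimal Sobel gradient sketch in plain Python. It is a generic textbook technique chosen for illustration, not the APEX-642's actual pipeline, and the function name and list-of-lists image format are assumptions.

```python
def sobel_magnitude(img):
    """Approximate edge strength for a grayscale image (list of rows).

    Border pixels are left at 0 because the 3x3 window does not fit there.
    """
    kx = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # horizontal gradient kernel
    ky = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # vertical gradient kernel
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(kx[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(ky[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = abs(gx) + abs(gy)  # cheap stand-in for sqrt(gx^2 + gy^2)
    return out
```

A flat region yields zero everywhere, while a sharp brightness step produces large values along the boundary, which is the kind of feature a cognition processor would pass on to later recognition stages.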

The APEX-642 image cognition processors are programmable, so proven algorithms can be easily implemented on them.

Built around four ARM Cortex-A53 cores, an ARM Cortex-M4, and L1/L2 cache memory, the CPU platform recognizes objects such as pedestrians, estimates and tracks motion, runs algorithms, combines signals from other sensor modules, and finally interprets them.

The ARM Cortex-A53 cores handle computational tasks, while the ARM Cortex-M4 serves as a sensor fusion MCU that handles inputs, outputs, and connectivity between the various sensors.

The vision platform incorporates Vivante’s GC3000 GPU and H.264 video codec technology to output 2D or 3D graphics on the user’s display screen.

Its software platform includes Green Hills Software’s INTEGRITY RTOS, Neusoft Corporation’s advanced real-time object recognition algorithm, and OpenCL.

INTEGRITY is a safety-certified real-time operating system that comes with a set of ISO 26262 ASIL-D certified development tools and highly optimized target solutions.

The real-time object recognition algorithm is designed to detect partial objects, allowing the S32V to distinguish between road hazards and pedestrian risks.

Another strength of the S32V vision SoC is its security and safety features, which comply with the stringent ISO 26262 functional safety standard.

Built around an ARM core, its security block implements an encryption engine for communications to protect the chip from outside hackers. Its safety block includes an FCCU, ML BIST, safe DMA, and debug and trace units.

Short for built-in self-test, BIST is a mechanism by which the chip tests and diagnoses by itself whether its CPUs and other peripherals are operating normally.
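The self-test idea can be sketched with a simplified march-style memory test run against a simulated memory. This is an illustration only: on a real chip BIST is dedicated hardware logic, and the `FaultyMemory` class and `march_test` function here are hypothetical names, not part of the S32V.

```python
class FaultyMemory:
    """Simulated bit-wide memory with optional stuck-at faults."""

    def __init__(self, size, stuck_at=None):
        self.cells = [0] * size
        self.stuck_at = stuck_at or {}  # address -> value the cell is stuck at

    def write(self, addr, bit):
        self.cells[addr] = self.stuck_at.get(addr, bit)

    def read(self, addr):
        return self.stuck_at.get(addr, self.cells[addr])


def march_test(mem, size):
    """Return the sorted addresses that fail a simplified march sequence."""
    failures = set()
    for addr in range(size):              # initialise every cell to 0
        mem.write(addr, 0)
    for addr in range(size):              # ascending: expect 0, then write 1
        if mem.read(addr) != 0:
            failures.add(addr)
        mem.write(addr, 1)
    for addr in reversed(range(size)):    # descending: expect 1, then write 0
        if mem.read(addr) != 1:
            failures.add(addr)
        mem.write(addr, 0)
    return sorted(failures)
```

A healthy memory returns an empty list, while a cell stuck at 0 or 1 is reported by address because it cannot hold both values in turn.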

Standing for fault collection and control unit, the FCCU collects fault reports from across the chip and controls the reaction to them on its own.

What also sets the S32V SoC apart from rival chips is its ability to capture and combine image signals from various cameras with other sensing data streams from radar or LiDAR, so that it can accurately identify what objects are and where they are, and make the right decisions.
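One common way to combine readings from different sensors is inverse-variance weighting, sketched below. The article does not say which fusion method the S32V uses, so this is an assumed, generic technique; the function name and the (value, variance) input format are hypothetical.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Each variance must be positive. Less noisy sensors get more weight,
    and the fused variance is smaller than any single input variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value
                      for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total
```

For example, if a camera estimates an object at 20 m with variance 4 and a radar estimates 10 m with variance 1, the fused distance lands at 12 m, closer to the more trusted radar reading.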

Equipped with Zipwire, FlexRay, Gigabit Ethernet, and LinFlex, its connectivity block enables a vision chip module to communicate with other sensor modules to combine and fuse different data signals.

Zipwire is a protocol that enables 300Mbps wired communication with other modules.

The S32V vision SoC is fabricated on a 28nm process technology.

"The rollout of the S32V SoC marks the first step in the concerted efforts of car makers and silicon content suppliers to deliver on the vision of self-driving cars," stressed Jeremy Park.